Best AI Accessibility Tools in 2026: A Detailed Review Guide

An estimated 3.5 million students with disabilities are currently enrolled in college in the US, and that number continues to grow as populations age and awareness increases.

But many content authoring professionals and instructional designers still treat accessibility as a final checkbox rather than a foundational design principle.

AI-powered accessibility tools are changing this.

These tools catch issues during creation, automate time-consuming tasks like captioning, and help ensure your final product meets digital accessibility guidelines without disrupting your workflow.

To help you decide which tool is best for your requirements, I reviewed the most talked-about AI accessibility tools. I selected a variety of tools aimed at educators, content creators, designers, and, of course, students and users.

Table of Contents

- What is an AI Accessibility Tool?

- How I Tested & Reviewed Each AI Accessibility Tool

- Top AI Accessibility Tools

- Key Types of AI Accessibility Tools & Real Use-Cases

- Trends & Future of AI in Accessibility

- AI Accessibility Tools FAQs

Quick Reads

- AI accessibility tools use artificial intelligence to improve digital accessibility and fall into two categories: creator tools (for building accessible content) and assistive technologies (for users with disabilities).

- Discover eight leading AI accessibility tools, including Visme, Stark, UserWay, Deque/Axe, Equidox, JAWS, Meta Ray-Ban Glasses and Voiceitt.

- Creator tools like authoring platforms and PDF remediation software help build accessible content, while assistive technologies like screen readers, eye trackers and speech-to-text tools help users access it.

- Use our decision-making checklist to help you choose an AI accessibility tool for your institution or learning platform. We cover items like your core use case, current workflow, accessibility compliance requirements and cost analysis.

- Heading into 2026, AI-powered accessibility is shifting from novelty to baseline expectation, multimodal AI is making assistive tech more powerful, and increased personalization is making accessibility solutions more effective for individual users.

- Create accessible content and streamline the design process with Visme's AI accessibility features, including text-to-speech and closed captioning tools.

- Once the final project is ready, run the accessibility checker to test your design and export SCORM/xAPI packages for seamless LMS integration.

What is an AI Accessibility Tool?

An AI accessibility tool is any type of software or hardware that uses AI to improve accessibility. These tools are separated into two categories: for creators and for users.

Tools for creators support the design process by helping you build accessible products from the start. They integrate into your existing workflows, saving time on remediation later on.

Tools for users help people with disabilities access content for education, work and entertainment. Some are software, while others are physical devices that people wear or use.

Some of these tools include:

- Digital PDF and web accessibility checkers: Tools that scan PDFs and/or websites to pinpoint accessibility issues. Some tools make the changes automatically, while others only suggest them.

- Accessibility widgets: Browser extensions or website add-ons that scan a page and either highlight accessibility issues or make cosmetic changes to help people with disabilities access the content.

- Screen readers: Software and applications that scan text in digital documents, websites, emails, etc., and read the content aloud.

- Text-to-speech generators: Tools that take a text snippet and turn it into spoken audio. There are typically many voices to choose from, along with customization options via a detailed prompt.

How I Tested & Reviewed Each AI Accessibility Tool

There are many accessibility tools available for both creators and users, far too many to include in a single short list. Most of them are also difficult to test without handing over your credit card details.

For this guide, I did the following:

- For creator tools, I checked which of the best-known accessibility tools offered AI-powered features. Then, I scanned the list of web accessibility tools on the Web Accessibility Initiative (WAI) website.

- For assistive technology, I prioritized tools that document user impact, recent innovations and where AI is the key differentiator.

- I researched each tool as thoroughly as possible without paying, by watching videos on each brand's YouTube channel and reading reviews.

*Disclaimer: The comparisons and competitor ratings presented in this article are based on features available as of 13th November 2025. We conduct thorough research and draw on both first-hand experience and reputable sources to provide reliable insights. However, as tools and technologies evolve, we recommend readers verify details and consider additional research to ensure the information meets their specific needs.

Top AI Accessibility Tools List and Comparison Guide

| Tool | Key AI Accessibility Features | Best For | Use Cases | Pricing | G2 Rating |
|---|---|---|---|---|---|
| Visme | AI Text-to-Speech, AI Captioning | Instructional designers creating training materials; designers and marketers creating presentations, infographics and documents | Creating accessible course presentations with SCORM/xAPI export for LMS integration | Free plan; paid plans start at $12.25/month | 4.5/5 (450+ reviews) |
| Stark | AI Sidekick with real-time suggestions, auto-generated alt text suggestions and contrast checking | Design teams building course interfaces and learning platforms | Real-time accessibility feedback during UI/UX design for educational apps | Free plan; paid plans start at $21/month | 4.5/5 (80+ reviews) |
| UserWay | AI-powered accessibility widget, live translation in 35 languages, integrated screen reader | Educational institutions needing website accessibility without extensive dev work | Adding an accessibility overlay to college/university websites and learning portals | Plans start at $490/year | 4.8/5 (600+ reviews) |
| Deque/axe | axe MCP Server, axe Assistant, axe DevTools Extension | Developers building custom platforms or apps | Comprehensive accessibility testing throughout the development lifecycle | Free plan; paid plans start at $60/month | N/A |
| Equidox AI | Fully automated PDF remediation, batch processing and AI-powered tagging | Institutions converting large PDF libraries into accessible formats | Bulk conversion of documents to comply with ADA standards | Upon request | N/A |
| JAWS | Picture Smart AI for image descriptions; FS Companion AI assistant for help while using JAWS and other tools | Users who are blind or have low vision; accessibility testers | Screen reading for learning materials, research papers, browsers and online platforms | Plans start at $623/year | N/A |
| Meta Ray-Ban Glasses | Be My Eyes integration, Detailed Responses for scene descriptions | Users needing hands-free assistance for navigation and comprehension | Real-time text reading, navigation assistance and visual scene descriptions | $329-$549 depending on style | N/A |
| Voiceitt | Personalized speech recognition, live captioning for video calls | Users with non-standard speech patterns; inclusive video conferencing | Enabling students with speech disabilities to participate in virtual classes and discussions | Plans start at $49.99/month | N/A |

1. Visme AI Text to Speech and AI Captioning

G2 Rating: 4.5/5 (450+ Reviews)

Visme is a content authoring tool that has a wide collection of AI tools that support design, development and delivery of content. The platform also offers several accessibility functions to help teams of all sizes create inclusive content like training materials, documents, presentations and more.

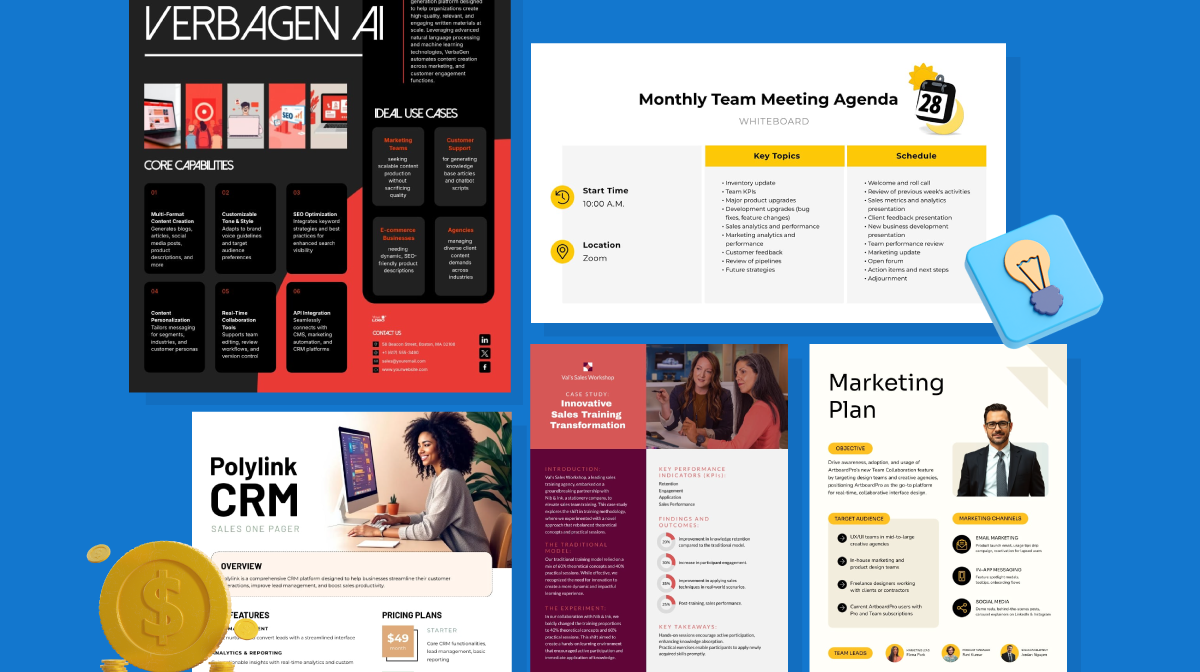

Inside Visme's AI hub, you'll find Text-to-Speech and AI Captioning: two AI accessibility tools backed by OpenAI technology. These tools make content more accessible to users with vision or hearing impairments.

I tested the text-to-speech first, with a Visme template about Go-to-Market Strategies. My plan was to use the text already in one of the slides and generate the audio from it. Once saved, I planned to add it to a hotspot and add a relevant pointer.

It worked. It turns out that an audio hotspot already has the option to show a blue marker. The only setback is that I couldn't move the marker to another spot on the canvas; I wanted to place it to the right of the text instead of the left. All in all, though, a good result.

Below is what I achieved. Click the blue arrow marker to hear the audio. I used two different female voices to compare. This is just a small example; you can apply it to any design, big or small.

Stand Out Features of Visme’s Text-to-Speech

- Ease of use: It’s super easy to turn text into an audio clip

- Audio library: Audio clips are quickly saved to a library so you can reuse them in pop-ups

- Voices: I like that there are several voices to choose from, but I would have liked to see more variety.

- Customization: It’s easy to further customize a voice’s tone, accent and speed by writing a text prompt.

- Languages: The tool detects what language you enter. I tested both English and Spanish.

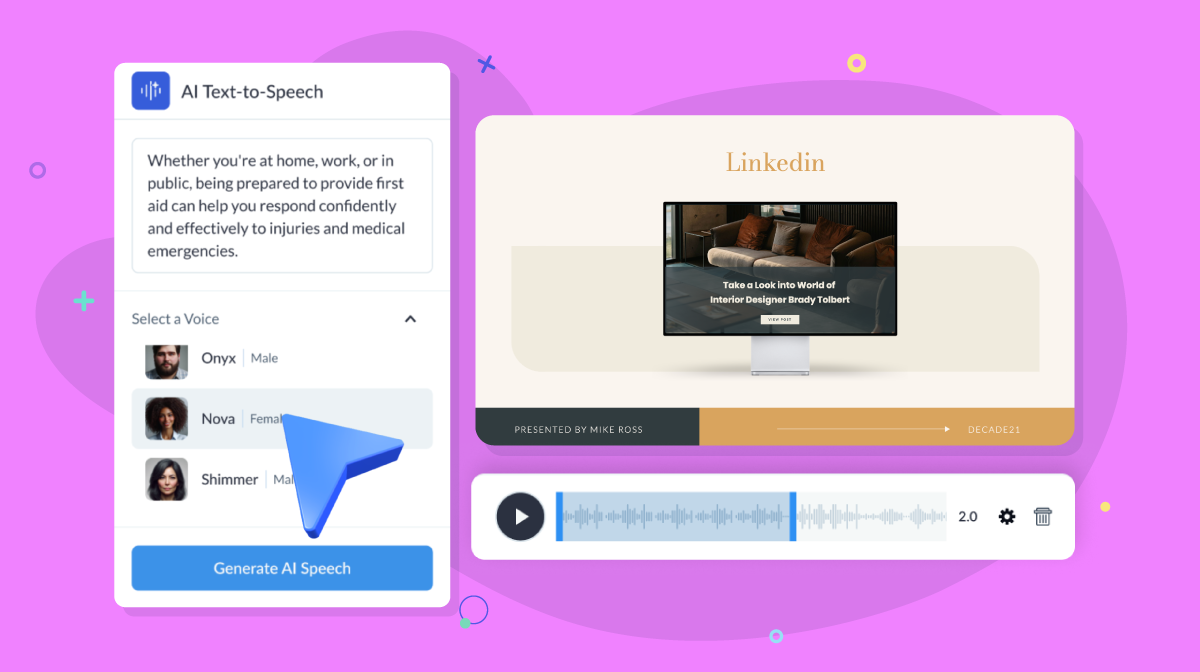

The second AI accessibility tool in Visme is also quite practical: AI-powered captioning. You can activate captions for any video or audio that features someone speaking. The option is in the hamburger menu, under the Accessibility drop-down. Once you activate the closed captions setting, it applies to your entire project.

The generated captions display in both the Editor and the Player. Keep in mind that captioning doesn't work for videos embedded from external platforms like YouTube or Vimeo; in those cases, you'll need to activate captions directly on the video platform.

I tested the AI-powered captioning feature on a vertical video in my library. It worked quite well, capturing the nuances of the speaker's voice. What bothered me was that the text came out very small, so small that for Instagram videos, for example, it's probably better to add the captions inside Instagram itself.

Below is the vertical video with the small captions. I find them far too small and wish their size were adjustable.

I then tested the feature in a horizontal presentation project, and there, the captions did appear larger. Therefore, this captioning tool will work great for videos in your presentation slides.

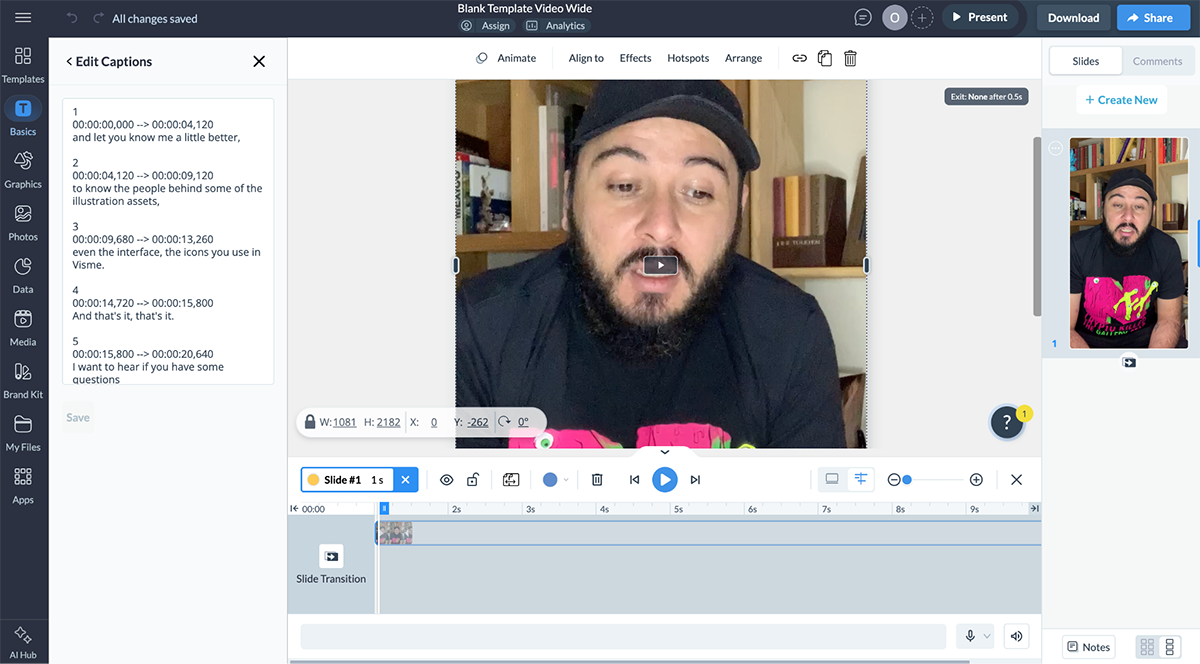

After your captions are generated, you can check that they’re accurate and edit them if necessary. To do so, first open the Timeline. You’ll find it in the top left hamburger menu.

Then, in the timeline, click the pencil icon in the top left, then right-click the clip with the captions you want to edit and choose Edit Captions.

Stand Out Features of Visme’s AI Captioning

- Capabilities: Captioning is available for video and audio. You just have to set it up and it’ll work on both.

- Customization: AI captioning is powerful but isn't always perfect, so it's practical that you can edit the captions after generation to get an exact match.

- Timeline Synchronization: When a project has captions activated, they sync up with the video or audio they’re pulling from. You can see this in the timeline at the bottom of the editor.

In addition to these specific AI-powered features, Visme also offers a range of accessibility tools to help you create inclusive content for all audiences.

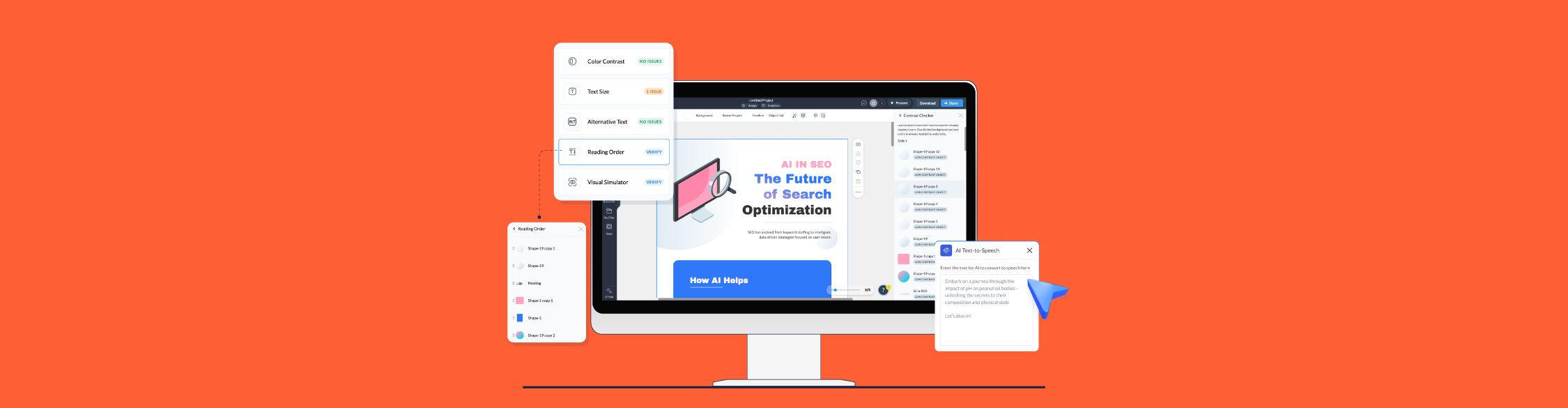

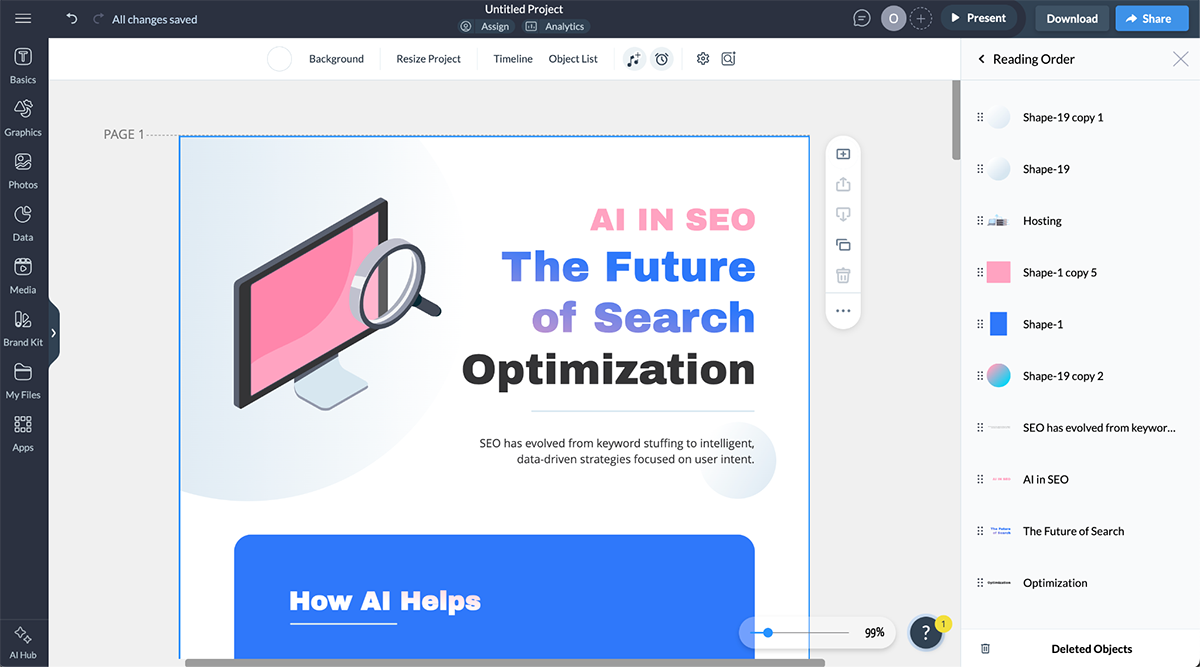

First, the Accessibility Checker in the editor scans your design and points out issues to fix. It reviews these issues:

- Color contrast: Evaluate contrast levels and adjust objects with low contrast.

- Text size: Assess text that’s smaller than 18px, which might be hard to read.

- Alternative text: Identify objects missing descriptions for screen readers.

- Reading order: Display the sequence in which screen readers will interpret your design.

- Visual simulator: View and adjust your design for various visual impairments.
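Visme doesn't say exactly how its checker measures contrast, but contrast checkers generally implement the WCAG 2.x contrast-ratio formula. As a rough illustration, here is a minimal Python sketch of that formula; the function names are hypothetical, and colors are assumed to be `#RRGGBB` hex strings.

```python
def _channel_to_linear(c8: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light, per WCAG 2.x."""
    c = c8 / 255
    # WCAG 2.x uses 0.03928 here (the sRGB spec says 0.04045); no 8-bit value
    # falls between the two, so the result is identical either way.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' color, from 0 (black) to 1 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG 2.x level AA: at least 4.5:1 for normal text, 3:1 for large text.

    WCAG defines large text as at least 18pt, or 14pt bold.
    """
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    print(round(contrast_ratio("#000000", "#FFFFFF"), 2))  # 21.0, the maximum
    print(passes_aa("#777777", "#FFFFFF"))
```

For example, mid-gray `#777777` on white comes out at roughly 4.48:1, just under the 4.5:1 AA threshold for normal-size text, while `#767676` just clears it.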

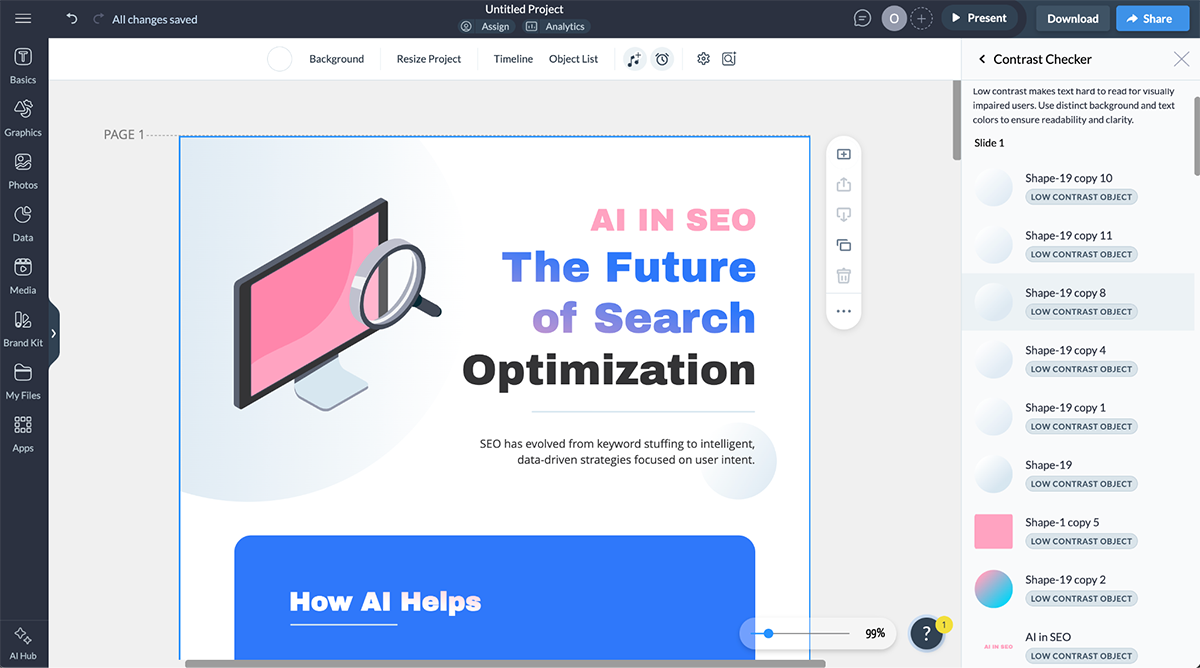

In the screenshot below, I'm testing the contrast checker on an infographic template from our library. It flags many subtle shapes. These results are a reminder that you don't need to fix everything; if you did, the design would become cluttered. The elements you must fix are those that support comprehension, such as text and icons. Subtle background shapes can stay subtle.

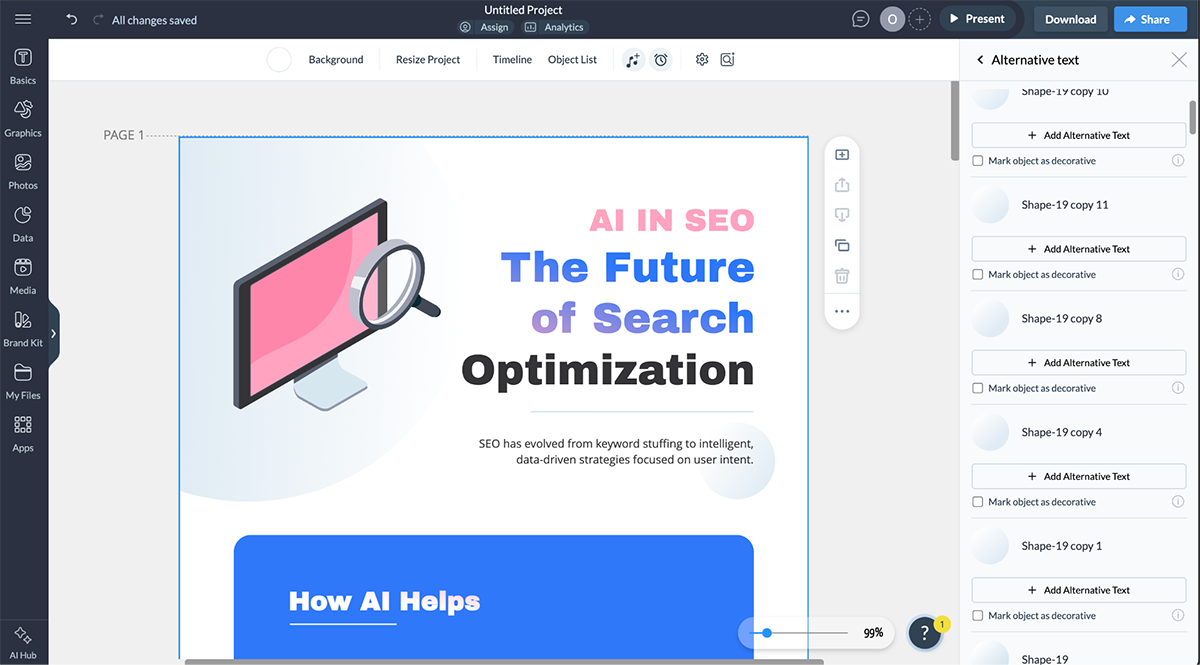

The next screenshot flags all visual assets without Alt Text. It’s showing all the shapes in the design again. Keep in mind that when a visual has Alt Text, a screen reader will read it.

So, imagine if every subtle bubble had Alt Text; it would hurt the comprehension of your content. Add Alt Text to images that the user needs to know about; don’t worry about decorative ones.
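The same alt-text logic applies outside Visme. On a web page, an image with `alt=""` is treated as decorative and skipped by screen readers, while an image with no `alt` attribute at all is a problem to fix. The sketch below uses Python's standard `html.parser` to sort `<img>` tags into those buckets; it's an illustration for HTML content, not how Visme's checker works internally, and the sample markup is made up.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Sort <img> tags into three buckets based on their alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []     # no alt attribute: flag for review
        self.decorative: list[str] = []  # alt="": screen readers skip the image
        self.described: list[str] = []   # alt="...": screen readers read this text

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        if "alt" not in attributes:
            self.missing.append(src)
        elif not (attributes["alt"] or "").strip():
            # A bare `alt` or alt="" both mark the image as decorative.
            self.decorative.append(src)
        else:
            self.described.append(src)


if __name__ == "__main__":
    sample_page = """
    <img src="funnel.png" alt="Diagram of the go-to-market funnel">
    <img src="bubble.svg" alt="">
    <img src="photo.jpg">
    """
    audit = AltTextAudit()
    audit.feed(sample_page)
    print("Needs alt text:", audit.missing)
    print("Decorative (skipped):", audit.decorative)
```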

Finally, I tested the Reading Order tool. It shows all the elements in the slide in the order a screen reader would read them, and you can reorder them if the system placed them incorrectly. Once again, all the shapes in the design appear in the Reading Order list. If they don't have Alt Text, screen readers will ignore them.

But, if a student or user employs the Picture Smart AI tool with JAWS, it will generate a description for each visual, regardless of whether you added Alt Text or not. So, to be safe and ensure accessibility with any tool, remove decorative elements from the Reading Order. They’ll still be in your design, but won’t ping the screen readers at all.
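Visme lets you reorder that list by hand. To show the idea behind an automatic first pass, here is a hypothetical Python heuristic that orders elements top to bottom and left to right and drops anything marked decorative. Real tools and screen readers use richer signals, so treat this as a simplified sketch; the `Element` fields and the 10 px row tolerance are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Element:
    name: str
    x: float                 # left edge, in px
    y: float                 # top edge, in px
    decorative: bool = False  # decorative shapes are removed from the order


def reading_order(elements: list[Element], row_tolerance: float = 10.0) -> list[str]:
    """Naive top-to-bottom, left-to-right order that skips decorative elements.

    Elements whose top edge is within `row_tolerance` px of a row's first
    element are grouped into that row, then each row is read left to right.
    """
    content = sorted((e for e in elements if not e.decorative), key=lambda e: (e.y, e.x))
    rows: list[list[Element]] = []
    for el in content:
        if rows and abs(el.y - rows[-1][0].y) <= row_tolerance:
            rows[-1].append(el)
        else:
            rows.append([el])
    return [el.name for row in rows for el in sorted(row, key=lambda e: e.x)]


if __name__ == "__main__":
    slide = [
        Element("Title", 40, 20),
        Element("Background bubble", 300, 5, decorative=True),
        Element("Caption", 90, 118),
        Element("Icon", 40, 120),
        Element("Body text", 40, 200),
    ]
    print(reading_order(slide))
```

Grouping near-equal `y` values into rows keeps an icon and its caption in left-to-right order even when their top edges differ by a few pixels.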

Additionally, these other tools will help you design better accessible content for students and general users:

- Accessible fonts: In the Visme editor, you’ll find lots of fonts that are accessible for both screen and print projects

- SCORM and xAPI export: Any of your finished Visme projects can be exported in LMS-friendly formats, making it easy to add them to your platform

- Hotspots and pop-ups: These interactive content elements display videos and generated or recorded audio to support a wider range of comprehension styles within the same slide or page

Other Visme Features

Besides being an AI accessibility tool, Visme also offers a wide range of AI and non-AI features for creating all-around great content:

- Brand Wizard: Pulls your brand assets from your website URL; the system automatically creates a brand kit and a set of branded templates

- Data visualization tools: Easily create accessible charts and graphs to add to all sorts of projects that share numerical information

- Visme Forms: An integrated form creator for making lead forms, email forms and popup forms

- AI Edit Tools: These AI-powered tools will help finesse images and visuals in your projects

- Social Media Scheduler: Publish and schedule your accessible social content created with Visme directly from the dashboard

- Visme AI: Take advantage of the comprehensive generative AI suite that will help you create first-draft designs of printables, invoices, LinkedIn posts, pitch decks, ebooks, proposals, and more

- AI Resize Tool: Resize any project to new dimensions for use on a different platform

- AI Image Generator: Create unique visuals for your projects

Check out this video from our YouTube channel, where you’ll learn how to create interactive training presentations with Visme:

Best for

Small, midsize and enterprise businesses, nonprofits, entrepreneurs and educators.

Pricing

- Basic: Free

- Starter: $12.25/month

- Pro: $24.75/month

- Visme for Teams: Request for pricing

Note: Visme offers discounted pricing plans for students, educators and nonprofits.

2. Stark AI Sidekick

G2 Rating: 4.5/5 (80+ Reviews)

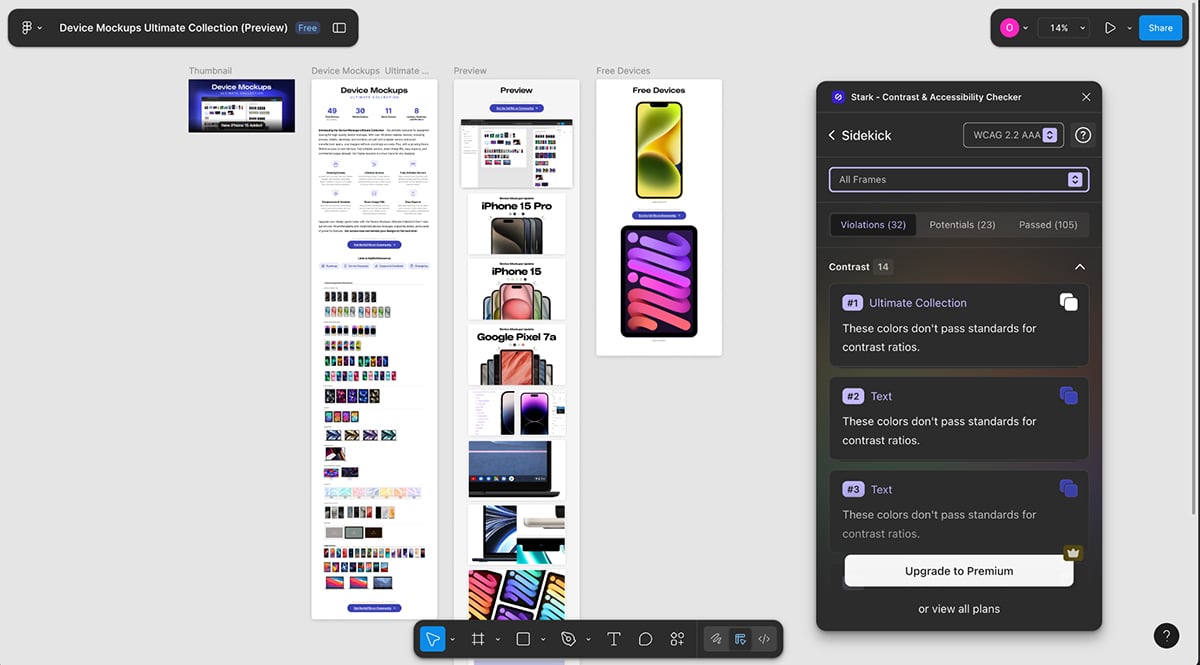

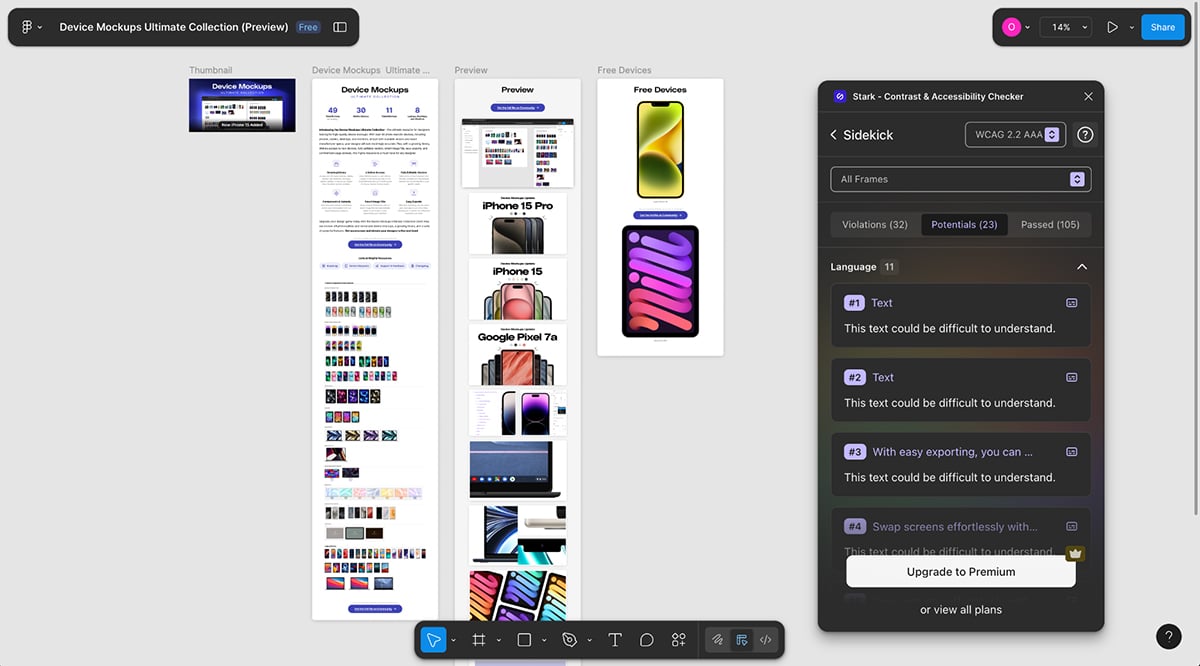

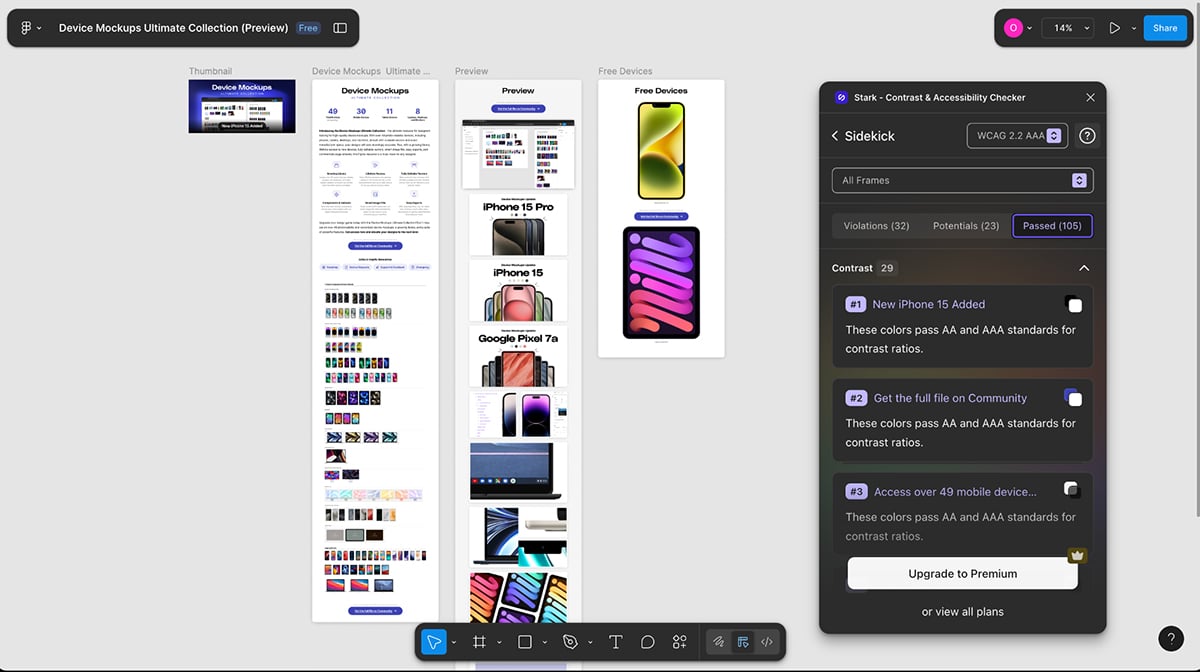

Stark is an end-to-end accessibility platform that integrates into existing workflows in Figma and Sketch. Its AI Sidekick checks your ongoing work and provides fast accessibility suggestions, such as color corrections to achieve proper contrast ratios, auto-generated alt text for images, better font sizes and more. In the suggestions list, click an issue to jump to the corresponding Stark tool and view detailed suggestions for fixing it.

What’s special about Stark is that the AI doesn’t make the changes automatically. It only suggests, leaving the final decision to the designer or developer.

I tested the Stark Sidekick Figma Plugin using a community file in my account. It’s quite simple. In the Figma canvas, go to the Figma logo, click the arrow next to it and select Plugins. If you’re signed into Stark, you’ll see it there; otherwise, use the search bar to find it and then sign in.

The plugin found several accessibility issues on my canvas: some regarding color contrast and others about the lack of alt text on the images. Unfortunately, without a paid subscription, I could only run the tool and not see the suggestions for each issue.

Regardless, I appreciate that all the issues, whatever their type, are grouped together and easy to see. Unlike Visme, where you check contrast with one tool and alt text with another, Stark shows everything in one place.

As with Visme, you can check for accessibility issues section by section. What Visme doesn't offer is a full-project scan, unless, of course, your project has only one slide.

In the three screenshots below, I scanned the full canvas. The first image shows the violations that need to be fixed. The second highlights potential violations: issues that aren't definite failures but could still cause problems in some cases. The third shows which elements passed. I appreciate that it includes a section for elements that passed inspection; other tools focus only on what's wrong, not on what's right.

Stand Out Features of Stark’s Sidekick

- Automated scanning: The tool scans your work and outputs detailed AI-powered suggestions for contrast, Alt Text and more, all based on accessibility guidelines

- Easy integrations: Sidekick offers several integrations like plugins for Figma, Sketch and Adobe XD, plus an integration with GitHub for code scanning

- Browser extensions: Stark also offers browser extensions for Chrome, Firefox and Edge

Best for

Designers, developers and product managers managing accessibility across multiple projects. The platform is primarily for enterprise businesses and the professionals who work there.

Pricing

- Free Trial: Free (with credit card)

- Premium Personal: $21/month

- Premium Pack: Starting at $198/year

- Launch: From $2,000/year

- Grow: From $6,000/year

- Scale: $15,000/year

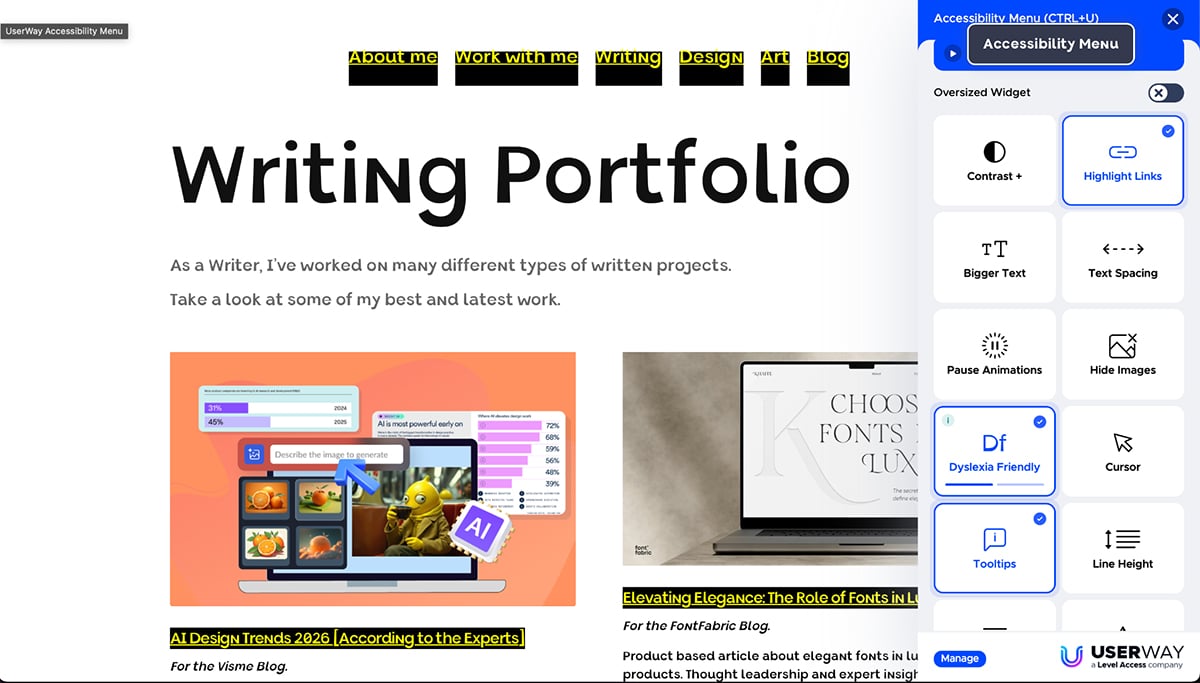

3. UserWay AI Accessibility Widget

G2 Rating: 4.8/5 (600+ Reviews)

Aside from the usual features and tools available in a comprehensive accessibility suite, UserWay also offers an AI-powered accessibility widget. The idea is to install the widget on your website with a snippet of code so visitors can adjust their experience to suit their needs.

That's exactly what I did. I installed the widget on my site and tested some of its features. In the image below, you can see how I set the site to display in the Dyslexia font, highlight links and show tooltips. That said, I'm not sure how the Tooltips setting works, since I didn't see any changes.

Now that I’ve installed the widget on my site, I’m definitely leaving it there! I appreciate that my visitors have the option to display the site however they want.

Both Visme and UserWay offer accessibility checks, but only UserWay actually changes how the final design looks.

Stand Out Features of UserWay’s Widget

- Easy installation: Installing the widget only requires adding a snippet to the <head> section of the site's HTML. There's also a UserWay plugin for WordPress

- Adaptability to visitor needs: Each user can adapt how a website looks by changing the following aspects:

  - Font types and sizes for improved reading, including Dyslexia font

  - Color saturation

  - Hide or show images

  - Show tooltips

  - And more

- Customizable: You can adjust the widget for brand alignment

- Multilingual support: The widget works in 35 languages and offers live translation

- Usage statistics: With a paid UserWay subscription, you can track how many times the widget is used and how

Best For

Company websites for any industry, marketers looking for accessibility additions without extensive development

Pricing

- Trial of the Free Widget: Scans your site and gives simple results

- Small Website: $490/year

- Medium Website: $1,490/year

- Large Website: Custom

4. Deque/axe MCP Server, Assistant and Dev Tools Extension

G2 Rating: N/A

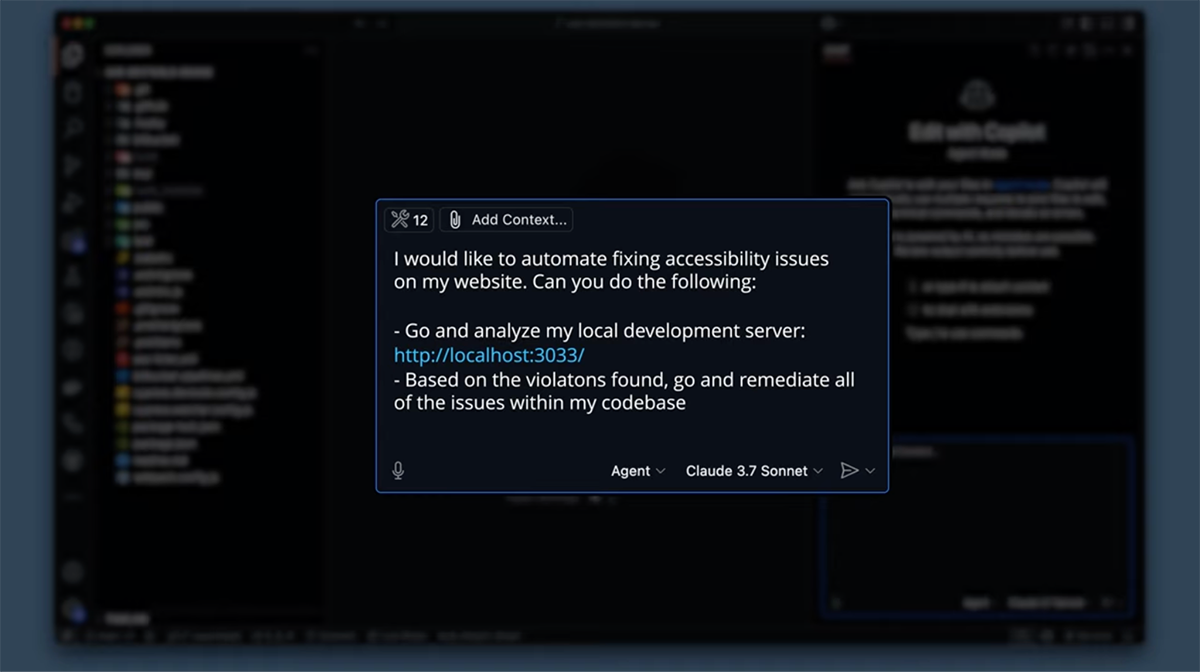

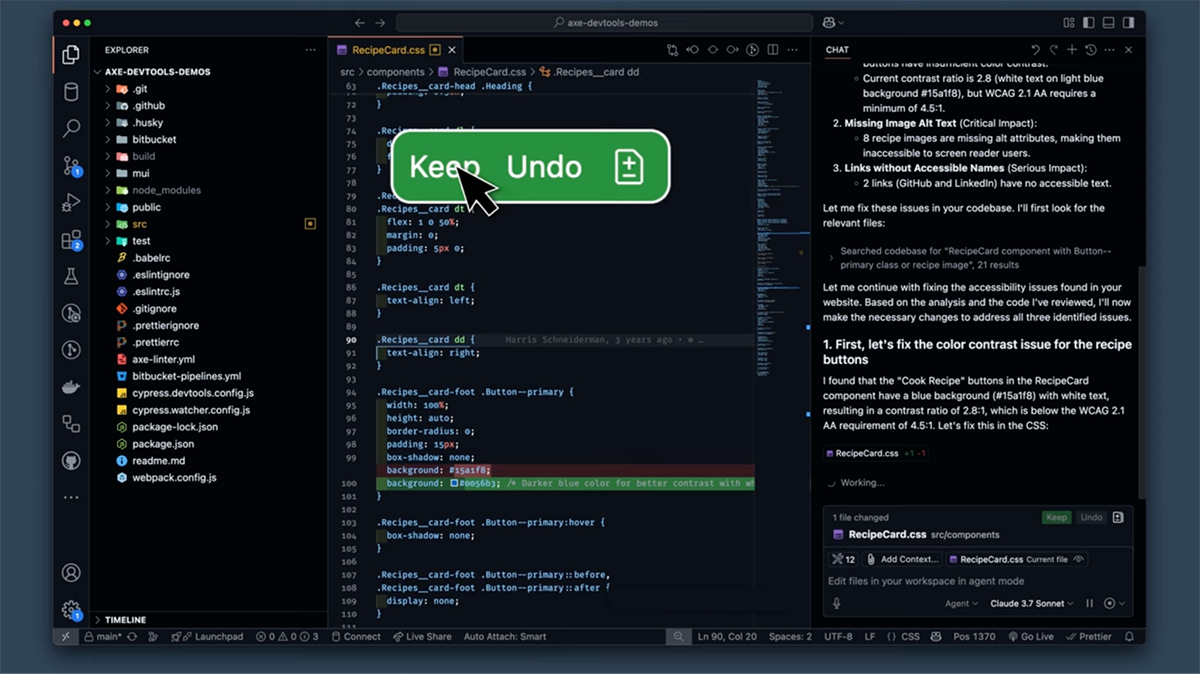

Deque's axe developer tools for accessibility cover the entire development cycle, from design to deployment. The platform offers a wide range of features for building accessible products, including three AI-powered ones:

- axe MCP Server makes code accessible by connecting the axe Platform to the AI agents you already use

- axe Assistant is an AI chatbot built specifically for accessibility, available in the browser, Slack and Microsoft Teams. Deque says it's trained on the industry's most authoritative source of digital accessibility knowledge

- axe Dev Tools Extension for browsers helps you find accessibility issues fast with AI-powered Intelligent Guided Tests (IGTs) and Advanced Rules

I first tested the axe DevTools Extension, a browser extension with a free trial. It integrates directly into Chrome DevTools, so you can run it on any site. After signing up for the trial, I installed the extension and tested it on my site.

To access it in Chrome, right-click the page, choose Inspect and then select axe DevTools from the DevTools tab bar.

This tool has a professional feel and looks very robust. I couldn't test many of the features because of the paywall. But if you're looking for a powerful browser-based accessibility checker, this is a professional-grade option.

Stand Out Features of Deque’s axe DevTools Extension:

- One-click scanning: Quickly catch accessibility issues on any website

- Intelligent Guided Tests (IGTs): According to Deque, this feature reduces an hour of manual testing to 2-3 minutes

- Built-in remediation guidance: Along with flagging accessibility issues, the tool also offers “how to fix” instructions

- Figma plugin: Aside from remediation scans, there’s also a Figma Plugin for design-phase accessibility checks

- Integrations: Connect axe DevTools Extension with GitHub, CI/CD pipelines and mobile testing
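axe DevTools is built on Deque's open-source axe-core engine, which reports results as JSON with a `violations` array; each entry has a rule `id`, an `impact` level (`minor`, `moderate`, `serious` or `critical`) and the affected `nodes`. For the CI/CD integration mentioned above, a minimal gate over such a results file might look like the sketch below, assuming you've already exported the results to JSON. The threshold and the function name are illustrative choices, not a Deque feature.

```python
import json
import sys

# Impact levels axe-core assigns to rule violations, from lowest to highest.
IMPACT_LEVELS = ["minor", "moderate", "serious", "critical"]


def blocking_violations(results: dict, threshold: str = "serious") -> list[str]:
    """Return one summary line per violation at or above the `threshold` impact."""
    min_rank = IMPACT_LEVELS.index(threshold)
    lines = []
    for rule in results.get("violations", []):
        impact = rule.get("impact")
        if impact in IMPACT_LEVELS and IMPACT_LEVELS.index(impact) >= min_rank:
            count = len(rule.get("nodes", []))
            lines.append(f"{impact}: {rule['id']} ({count} element(s))")
    return lines


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python axe_gate.py axe-results.json
    with open(sys.argv[1], encoding="utf-8") as f:
        problems = blocking_violations(json.load(f))
    for line in problems:
        print(line)
    # A non-zero exit code makes most CI systems fail the build.
    sys.exit(1 if problems else 0)
```

Lowering `threshold` to `"moderate"` makes the gate stricter; starting at `"serious"` lets a team block the worst issues first without failing every build on day one.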

Moving on to the axe MCP Server. This isn’t a tool I could personally test, so my opinions are based on the research I did by reading through all their documentation and watching videos on their channel.

Before finding it, I didn't know there were tools that help developers write accessible code. I think it's a powerful tool because it catches issues very early on.

Stand Out Features of axe MCP Server:

- One-click fixes: Accessibility issues are easily fixed directly in your development environment

- Integrations: axe MCP Server connects with GitHub Copilot, Cursor, Claude Code, Windsurf and VS Code

- Single-environment workflow: Eliminates context switching so developers can work end-to-end in one place.

- High-level security: Enterprise-grade security with no data used for AI training

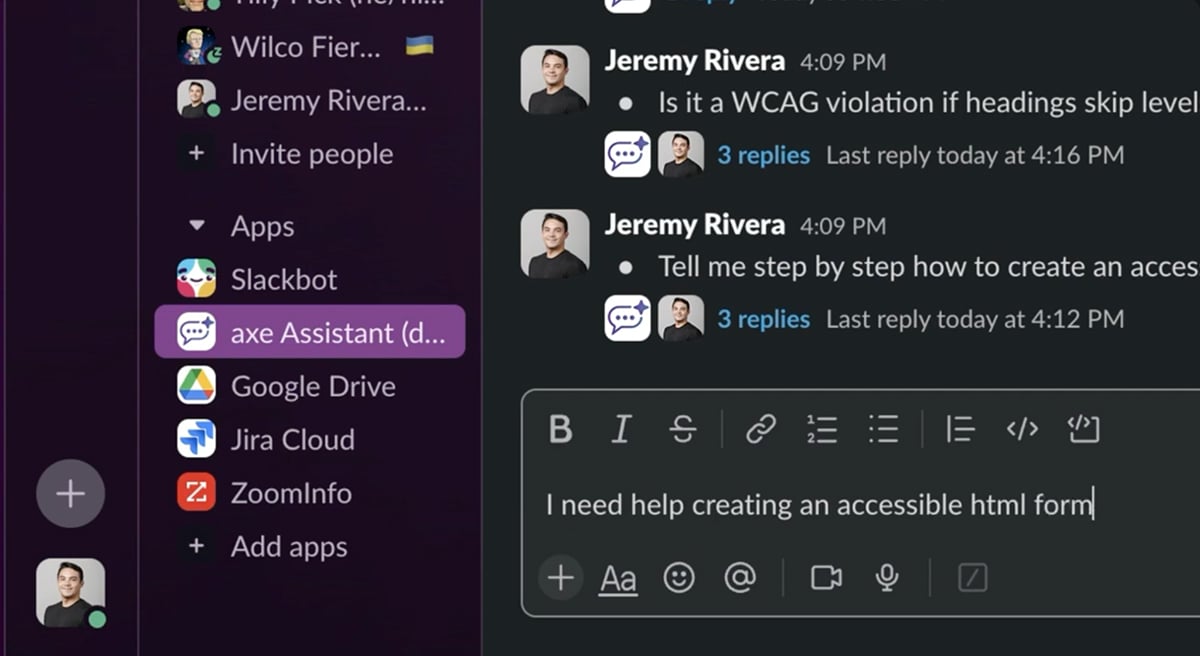

The third tool, axe Assistant, works inside Slack and Microsoft Teams. Developers can open a chat with axe Assistant and get accessible code for the project they're working on. It seems very practical and could save a lot of time.

Stand Out Features of axe Assistant:

- Availability: You can access the assistant via web browser, Slack and Microsoft Teams

- Instant answers: The assistant offers instant responses to your accessibility questions, from policy to code implementation

Best for:

Developers, medium to large and enterprise businesses in finance, banking, retail, healthcare, government and telecommunications.

Pricing

- Free: Free forever

- Pro: $60 per user per month

- Enterprise: Price upon request

5. Equidox AI

G2 rating: N/A

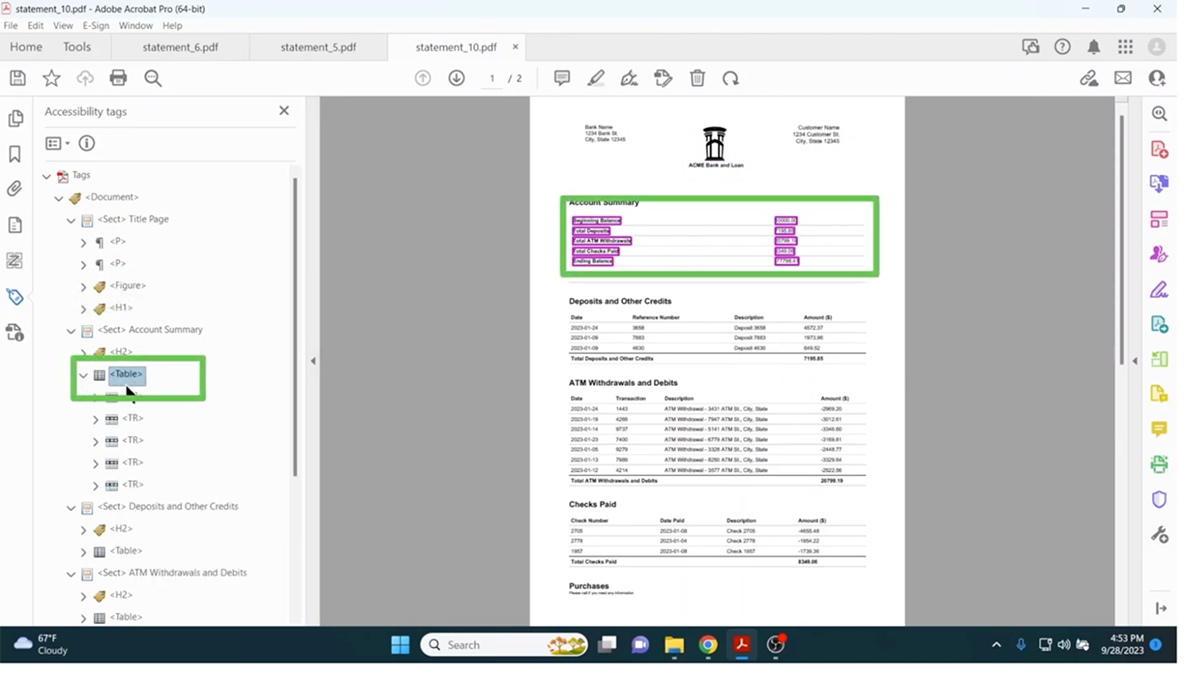

Equidox is a platform that can quickly convert hundreds or thousands of PDFs into accessible, ADA- and Section 508-compliant documents.

Equidox includes two systems: Equidox Software, the legacy tool, and Equidox AI, an automated PDF accessibility solution for high-volume, templated documents such as statements, invoices, directories and EOBs (explanations of benefits).

Equidox AI uses batch processing to scan PDFs and quickly apply remediation across large directories, while ensuring proper language settings, proper heading structures, accurate alt text and the use of small icons for complete accessibility compliance.

Unfortunately, this tool is completely behind a paywall, and there was no way for me to test it. But the demo video published on their YouTube channel is pretty comprehensive and explains in detail how Equidox AI can process PDFs.

Here's what it does: The system scans each PDF, identifies text blocks, images, tables and headings, then applies proper structural tags that screen readers need to interpret the document correctly. It also generates alt text for images and ensures the reading order flows logically from top to bottom.
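Equidox doesn't publish how its tagging works. To make "proper heading hierarchy" concrete, here is a hypothetical Python check you could run on a document's structure tags, listed in reading order, to flag headings that skip a level (for example, an H1 followed directly by an H3), a common finding in PDF accessibility audits. The tag-list input format is an assumption, not Equidox's API.

```python
def heading_level_skips(tags: list[str]) -> list[tuple[int, str, str]]:
    """Find headings that jump more than one level deeper than the previous heading.

    `tags` lists a document's structure tags in reading order,
    e.g. ["H1", "P", "H2", "Figure"]. Returns (index, previous, offending) tuples.
    """
    skips = []
    previous = None  # level of the most recent heading seen
    for i, tag in enumerate(tags):
        if len(tag) == 2 and tag[0] == "H" and tag[1].isdigit():
            level = int(tag[1])
            # Going deeper by more than one level (H1 -> H3) is a skip;
            # going back up (H4 -> H2) is fine.
            if previous is not None and level > previous + 1:
                skips.append((i, f"H{previous}", tag))
            previous = level
    return skips


if __name__ == "__main__":
    print(heading_level_skips(["H1", "P", "H3", "P", "H2", "H4"]))
```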

Without Equidox AI, someone would need to remediate each PDF manually: opening it in Adobe Acrobat or similar software, clicking through every element to add tags, writing alt text for every image, setting a proper heading hierarchy and testing the reading order with a screen reader. For a single 50-page document, this takes 1-2 hours. For a library of 3,000 documents, that adds up to 3,000 to 6,000 hours, well over a year of full-time work for one person.
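To put that manual estimate in perspective, here's the arithmetic as a small Python helper. It assumes roughly 160 working hours in a full-time month (40 hours a week for four weeks); `remediation_effort` is an illustrative name.

```python
def remediation_effort(documents: int, hours_per_doc: float,
                       hours_per_month: float = 160.0) -> tuple[float, float]:
    """Return (total hours, full-time months) for manual PDF remediation."""
    total_hours = documents * hours_per_doc
    return total_hours, total_hours / hours_per_month


if __name__ == "__main__":
    for hours in (1, 2):  # the 1-2 hours per 50-page document estimate
        total, months = remediation_effort(3_000, hours)
        print(f"{hours} h/doc: {total:,.0f} hours, about {months:.1f} full-time months")
```

At 1 to 2 hours per document, 3,000 documents come to 3,000 to 6,000 hours, or about 18.8 to 37.5 full-time months for a single person.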

While other tools emphasize human oversight for accessibility fixes, Equidox AI handles batch processing automatically. For high-volume templated documents like invoices, statements and course packets, where the structure repeats, automation makes sense: the manual alternative isn't feasible at scale.

Most of the tools I tested keep you involved; Stark suggests fixes, and Visme flags problems for you to correct. But Equidox AI just does it automatically. For thousands of similar documents, this is perfect. But I wouldn't want full automation on custom content.

Stand Out Features of Equidox AI:

- Fully automated remediation: The system fixes PDFs for accessibility automatically without needing any human intervention

- High-volume capabilities: The platform can handle thousands of templated documents, such as statements, invoices, directories and EOBs, spanning millions of pages

- API integration: You can connect Equidox AI with your existing document workflows

Best for

Organizations producing PDFs at scale, like financial institutions, healthcare, government, insurance and education institutions. If you’re creating fewer than 10-20 PDFs/month, manual tools might be more cost-effective.

Pricing

All Equidox pricing is upon request.

6. JAWS Picture Smart AI and FS Companion

G2 Rating: N/A

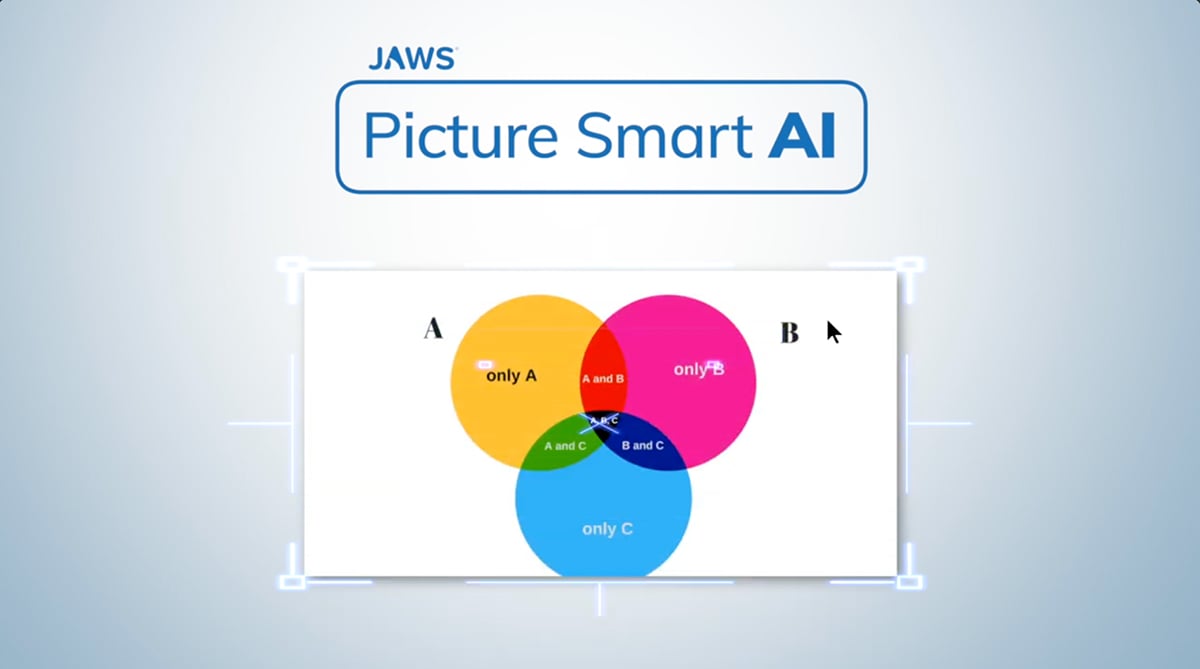

JAWS (Job Access With Speech), developed by Freedom Scientific, is one of the world's most popular screen readers. It reads on-screen content aloud or outputs it to a refreshable Braille display. Recently, Freedom Scientific introduced two major AI innovations: Picture Smart and FS Companion.

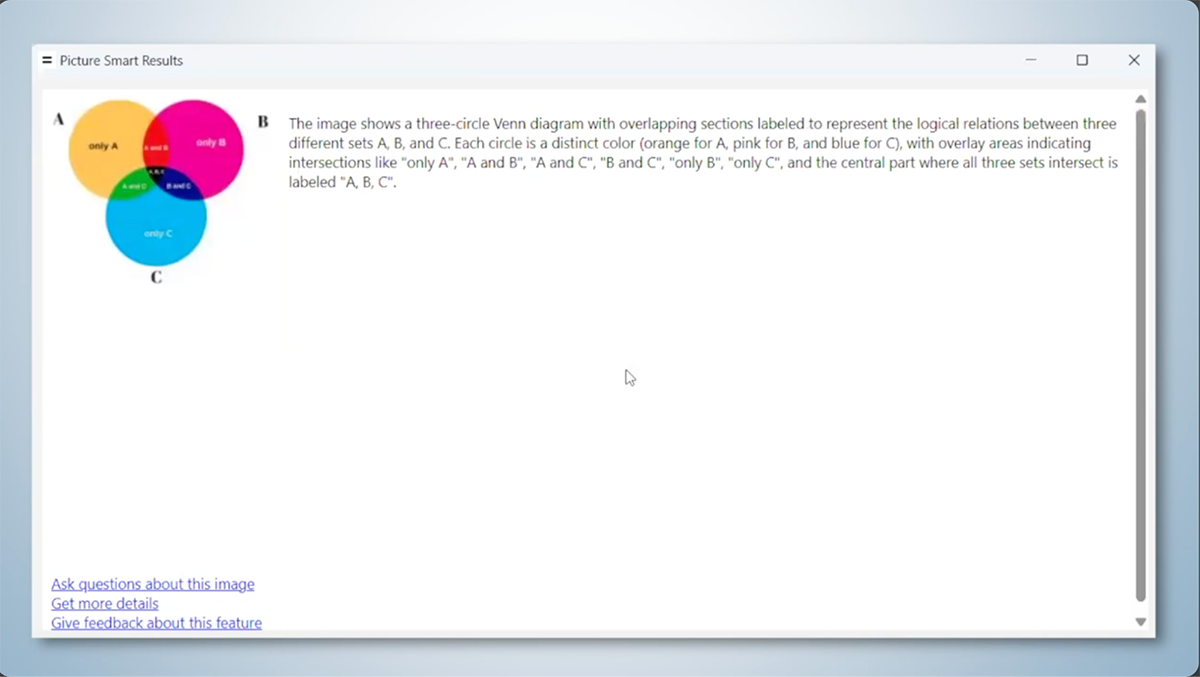

Picture Smart AI uses AI to generate detailed descriptions of images on web pages, in emails or in screenshots. This is particularly helpful for visuals that are missing Alt Text.

When JAWS Picture Smart AI is activated, it works alongside the regular screen reader, pausing at each visual to scan it and provide results. In the screenshots below you can see how it centers on an image and then outputs an AI-generated description. JAWS then reads it aloud to the user.

After watching their videos, reading through the reviews and considering the success of JAWS, I can confidently recommend it as a strong contender as your screen reader solution.

Stand Out Features of Picture Smart AI:

- Multi-language support: The voices can speak in several languages, including more unique ones like Icelandic, Macedonian, Albanian and Croatian

- ChatGPT integration: Picture Smart AI uses the ChatGPT API, which lets the user ask follow-up questions about the image

- Versatility: It works across browsers, PDFs and screenshots

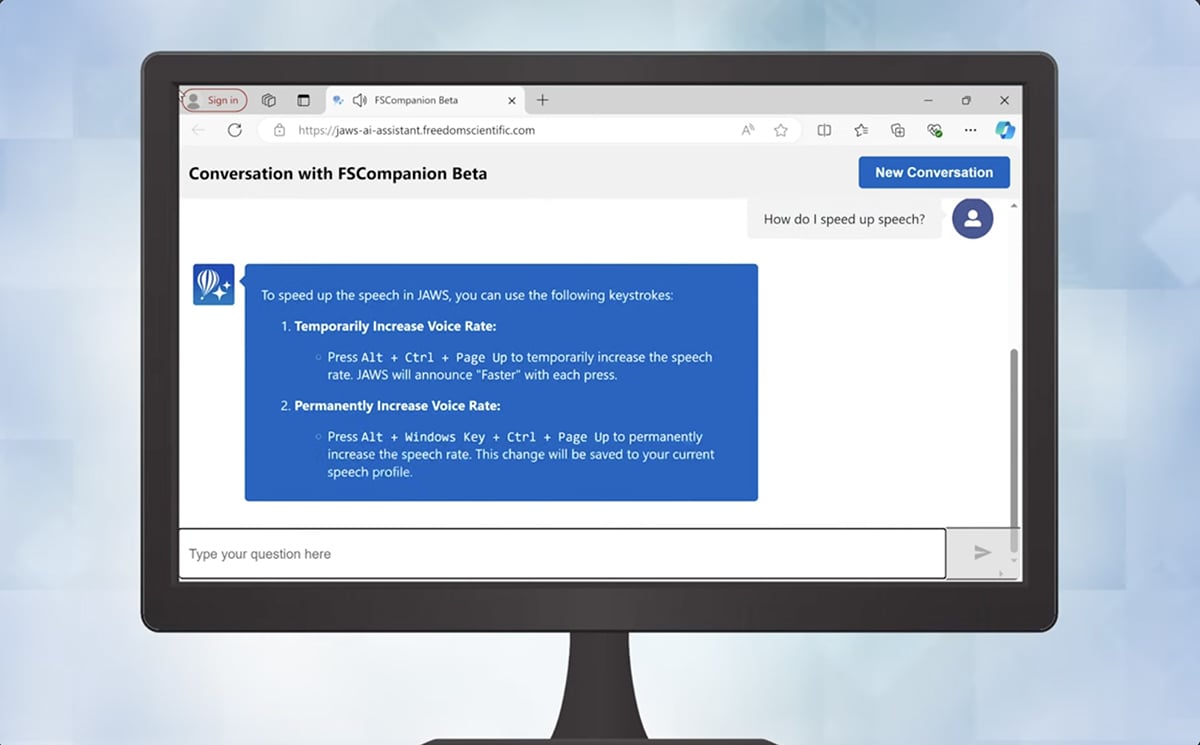

The second AI-powered accessibility tool that works with JAWS is the FS Companion. It’s an AI assistant specifically trained to help JAWS users navigate the tools and functions inside JAWS and other Freedom Scientific software, Microsoft applications and the Windows operating system.

The screenshot below shows that it’s both simple and powerful, a great combination.

Best For

People who are blind or have low vision and need comprehensive screen-reading capabilities. Also valuable for accessibility professionals to test how their content performs with JAWS.

Unfortunately, JAWS doesn’t work on Mac devices. You’ll have to find another tool if you want a Mac-friendly screen reader.

Pricing

- JAWS Home Perpetual: $1,548

- JAWS Home Subscription: From $623/year

- JAWS Professional Perpetual: $2,267.50

- JAWS Professional Subscription: From $926/year

7. Meta Ray-Ban Smart Glasses

G2 Rating: N/A

Meta’s partnership with Ray-Ban produced smart glasses with AI capabilities that support accessibility. By wearing the glasses, people with sight impairments can access information about the space around them and the objects in front of them.

Best of all, they look good!

The two main AI accessibility features in the Meta Ray-Ban Smart Glasses are the integration with the Be My Eyes app and the recently upgraded Detailed Responses.

First of all, let’s talk about the integration with Be My Eyes. When you connect the Be My Eyes app to a pair of Meta Smart Glasses, you can call a volunteer and they will describe what’s in front of you to the best of their ability.

I find this feature groundbreaking, because even the Detailed Responses feature we'll look at next still isn't very detailed.

With a human in the loop, the user can get real explanations and descriptions. This is highly personalized and a huge reason to get a pair of smart glasses.

The glasses give you hands-free help, which is completely different from software like JAWS that needs a computer or phone. For navigating physical spaces, like a campus or classroom, hands-free makes way more sense than holding a device.

Stand Out Features of Be My Eyes Integration:

- Voice-activation: Easily connect to human volunteers or AI assistance by asking it aloud. Start with “Hey, Meta”

- Real-time assistance: Volunteers are available quickly to help with navigation and daily tasks

The second AI accessibility tool in Meta's smart glasses is Detailed Responses, which describes what's in the user's line of sight.

In this video, Sam from The Blind Life reviews and explains the updated Detailed Responses feature.

I noticed that even though it's been improved, the responses still aren't always accurate, and there were a few mistakes. Hopefully, this will continue to improve with regular updates.

Features of Detailed Responses

- Voice-activation: Simply ask “Hey, Meta” to get scene descriptions

- Text reading aloud: The system will read text from any surface

- Location identification: Get descriptions of objects and landmarks

- Multimodal AI processing: The combination of AI tools helps with contextual understanding

Best For

People who are blind or have low vision who need hands-free AI assistance for navigation, reading, and scene understanding.

Pricing

- $329-$549 depending on style

- Be My Eyes is free

8. Voiceitt

G2 Rating: N/A

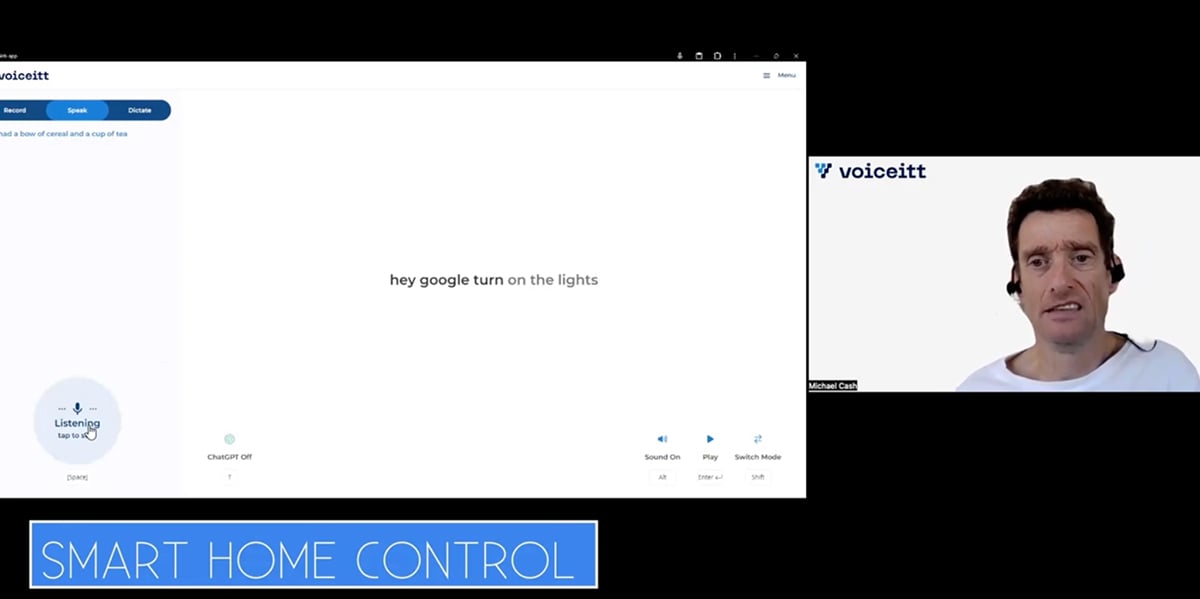

Voiceitt addresses a critical gap in voice technology by helping people with non-standard speech patterns.

This award-winning AI application learns individual speech patterns over time, making voice technology accessible to people with cerebral palsy, ALS, Down syndrome, stroke, or other conditions affecting speech.

It functions as speech-to-text/action software, providing captions for video calls and voice control for smart home devices. It's designed specifically for speech that standard voice recognition can't understand.

Learning to use Voiceitt is easier with their video tutorials. They explain step by step how to set up the system and train the AI on your unique voice. After a few training sessions, Voiceitt can transcribe speech that standard recognition tools fail to understand.

Stand Out Features of Voiceitt:

- Personalized AI speech models: The system learns your unique speech patterns

- Live captioning: Voiceitt captions your speech during video calls on Zoom, Webex, Microsoft Teams and Google Meet

- Voice commands: Control smart home devices through Alexa and Google Assistant, as well as web browsers like Chrome, by voice

- Privacy-focused: Voice data is processed locally when possible

Best for

People with non-standard speech who want to use voice technology for communication, dictation, or device control.

Pricing

$49.99/month or $499.99/year

Key Types of AI Accessibility Tools & Real Use-Cases

Accessibility tools fall into two categories: tools for creators and tools for users.

Let’s take a look at the most prevalent types in each category.

AI Accessibility Tools For Creators

According to the Digital Learning Institute, “The arrival of AI in assistive technology is transforming accessibility.

From assistive reading and communication tools to mobility aids, AI-powered technologies are empowering people with disabilities to live with more independence and confidence.”

These tools help instructional designers, course creators, and LMS administrators build accessible learning experiences.

Authoring Tools With Integrated Accessibility Tools

Authoring tools help you create anything users will interact with, such as websites, PDFs, training presentations, professional presentations, learning platforms and apps.

When they include AI accessibility features, these tools support the design and development process. They help you catch and fix issues before they affect the learner’s experience.

For instance, when designing an accessible presentation in Visme for a professional development course, you can easily use:

- AI-generated voiceover narration for each slide

- AI captions for embedded video demonstrations

- Accessibility Checker verification for color contrast and text hierarchy

- SCORM export for seamless LMS integration with accessibility features preserved

The video below shows you how to export your projects so they’re ready to upload to your LMS.
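The color contrast check in the list above is one of the easier accessibility checks to automate. The Python sketch below shows the general idea. It is not Visme’s implementation. It applies the WCAG 2.x relative-luminance and contrast-ratio formulas, and the function names are hypothetical. The 4.5:1 (normal text) and 3:1 (large text) thresholds are the WCAG AA minimums.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a 6-digit hex color such as '#1A2B3C'."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError("expected a 6-digit hex color")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1:1 (identical) to 21:1 (black on white)."""
    l1, l2 = relative_luminance(foreground), relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG AA minimum: 4.5:1 for normal text, 3:1 for large text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


if __name__ == "__main__":
    # Mid-gray text on white sits just under the 4.5:1 AA threshold.
    print(round(contrast_ratio("#777777", "#FFFFFF"), 2))
    print(passes_aa("#777777", "#FFFFFF"))
```

Production checkers also account for font size and weight, transparency and text over images, so treat this as a first-pass filter only.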

Accessibility Remediation Software for PDFs

Educational institutions rely heavily on PDFs for syllabi, textbooks, research papers and course packets. Unfortunately, most PDFs aren’t accessible by default: screen readers can’t interpret them correctly, so visually impaired students struggle to access the content.

AI-powered PDF accessibility checkers scan PDFs and either automatically remediate them or suggest changes for the designer to make.

The most common issues are missing or incorrect screen reader tags, broken heading structures, missing alt text for images and non-compliance with Section 508 and WCAG 2.1.

Imagine a community college library has 3,000 PDF course packets from the past five years, but none are accessible. Manual remediation would take months and cost tens of thousands of dollars.

Using Equidox AI, the team can process the entire library in weeks, making historical course materials available to students with disabilities.

AI Accessibility Tools For Users | Assistive Technology

These are tools that help users and students with disabilities access content effectively.

Screen Readers

Screen readers convert on-screen text and interface elements into synthesized speech or braille output. These features let blind and visually impaired students navigate digital learning environments. They also help employees with visual impairments work more independently.

Tools like JAWS and Speechify are widely used and have a good reputation.

Imagine this scenario: a blind graduate student enrolls in an online data science course. The course includes many charts and graphs, but not all have alt text.

JAWS' Picture Smart AI analyzes these visuals and provides detailed spoken descriptions, allowing the student to understand the data without waiting for the instructor to add proper alt text.

If a student has trouble using JAWS with Excel for an assignment, FS Companion can help right away, so they don’t have to wait for someone else.

Eye Tracking Devices

Eye-tracking technology lets students and users with limited mobility control computers with their eyes alone. This makes it a critical tool for accessing LMS platforms and websites, completing assignments and participating in online discussions.

Modern AI-powered eye trackers like Tobii combine dedicated sensor hardware with machine learning to improve accuracy over time, learning individual gaze patterns and adapting to different lighting conditions.

With these systems, users can navigate an LMS, type responses in discussion forums and even operate virtual classroom software.

Real use case: A student with ALS uses a Tobii eye tracker to participate fully in online coursework. The AI-enhanced system, which includes a pair of smart glasses with sensors and a companion computer application, tracks where the student is looking by analyzing eye movement.

With this assistive technology, students with many types of disabilities can navigate Canvas, read course materials, submit written assignments using gaze-controlled typing and participate in video conferences, all without a physical keyboard or mouse.

Speech-to-Text Tools

Speech recognition tools convert spoken words into written text, supporting students with dysgraphia, mobility limitations or those who process information better through speaking than writing.

Voiceitt stands apart because it works for students and users with non-standard speech patterns, like those with cerebral palsy, Down syndrome, stroke effects, or other conditions that affect speech clarity.

Let’s say a student with cerebral palsy enrolls in an online writing course. Standard dictation software can’t understand their speech, which makes class participation nearly impossible.

With Voiceitt, the AI learns their unique speech patterns over several training sessions. Now they can dictate essays, participate in online discussions via voice-to-text and complete timed writing assignments.

Switch Devices and Alternative Input Systems

Switch devices allow students and users with severe motor limitations to control computers using whatever movements they can reliably perform, such as head movements, eye blinks, breath control or single-finger movements.

Modern AI-enhanced switch systems like Cognixion ONE use brain-computer interface technology combined with AI to interpret user intent from minimal physical signals. These systems can control LMS navigation, operate word processors for assignments and even facilitate real-time communication in virtual classrooms.

Picture this: A student with locked-in syndrome uses Cognixion ONE to complete an online degree program. The AI-powered system interprets their subtle head movements and eye gaze to navigate Canvas, submit assignments, participate in discussion boards and take exams.

Trends & Future of AI in Accessibility

AI in accessibility is advancing quickly.

Neil Milliken, Vice President and Global Head of Accessibility & Digital Inclusion at Atos, shares his perspective:

“As someone deeply passionate about creating inclusive digital workplaces, and as a long-term user of assistive technology, I’ve witnessed firsthand how technology can both empower and exclude. The last decade has seen remarkable advances, but as we look ahead, I’m convinced the next 10 years will be an even bigger leap, especially when it comes to assistive technologies powered by AI.”

As part of this leap, the following trends are significant now and will only get bigger in the future.

AI-Powered Accessibility and Automation as Baseline Expectations

A few years ago, AI was a novelty. Little by little, existing accessibility tools added AI capabilities to improve their offerings and enhance automation. Then AI-first accessibility tools entered the market, offering even more flexibility for both creators and users.

At this point, AI-powered accessibility automations and features are becoming baseline expectations.

AI accessibility features, now available in some tools, will eventually be part of most tools. Automation will also be more widespread, with AI accessibility checkers running in the background and flagging issues as they occur.

As a result, designers and developers will soon treat accessibility as a design priority rather than just another item on the checklist.

Multimodal AI is Making Assistive Technology More Powerful

The convergence of vision AI, natural language processing, and contextual reasoning creates unprecedented possibilities for students, workers and anyone with disabilities.

Meta's smart glasses demonstrate this convergence by combining scene recognition, natural language interaction and real-time translation. For learners, this means seamless access to both digital course materials and physical learning environments without juggling multiple specialized devices.

Similarly, JAWS' Picture Smart AI combines computer vision, natural language generation and educational context awareness to automatically describe photos, charts, diagrams, and infographics in course materials, presentations and documents.

Increased Personalization In AI Accessibility

Generic accessibility software and other solutions often fail users with unique needs. AI's ability to learn individual patterns and preferences makes accessibility more effective than one-size-fits-all approaches.

Voiceitt exemplifies this trend. Its AI adapts to each student’s unique speech patterns by learning individual pronunciation, rhythm and articulation, becoming more accurate with use.

AI-powered accessibility tools are beginning to adapt content presentation based on individual accessibility needs. The UserWay widget, for example, helps users adjust text size for low vision, switch to high-contrast modes, or prioritize transcripts over videos for deaf users.

AI Accessibility Tools FAQs

AI powers accessibility through four main technologies:

- Machine Learning analyzes patterns in data to improve accuracy over time, adapting to individual users and behaviors.

- Natural Language Processing (NLP) enables AI to understand and generate human language, powering features like automatic captions and alt text generation.

- Computer Vision allows AI to interpret images, recognizing text, objects, and visual structures in digital content.

- Artificial Neural Networks are loosely inspired by how the human brain processes information, enabling complex tasks such as scene understanding and natural speech synthesis.

Savings vary by institution and project size, but AI accessibility tools do make an impact:

- Caption generation: AI-generated captions take a fraction of the time of manual transcription and reduce the need for expensive outsourced transcription services.

- PDF remediation: Automated tools process thousands of PDF documents far faster than manual tagging, at a fraction of the cost of outsourced remediation services.

- Accessibility testing: Automated scans replace lengthy manual checks, freeing up design time and reducing overall project costs.

- Legal risk prevention: Proactive AI tools cost a fraction of what an accessibility lawsuit can, and they catch issues before they become compliance problems.

- Development efficiency: Finding and fixing accessibility issues during development costs far less than remediating them after launch.

AI accessibility tools are designed to help you achieve compliance with WCAG 2.1, Section 508, and ADA standards, but they’re enablers, not guarantees. You’re still responsible for the final result.

For optimal results, use AI tools during creation, run automated testing and conduct manual verification with screen readers. AI makes compliance much easier to achieve, but it works best as part of a comprehensive accessibility strategy.

AI removes traditional barriers for students with disabilities in the following ways:
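Automated testing usually begins with simple rule checks like the one sketched below. This is an illustration only. It is not how Deque’s axe or any other tool in this guide works, since those tools apply far more rules. The snippet uses Python’s standard-library `html.parser` to flag `<img>` elements that have no `alt` attribute at all. The class and function names are hypothetical. Images with an empty `alt=""` are deliberately skipped, because that is the accepted way to mark purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect (line number, src) for every <img> without an alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[tuple[int, str]] = []

    def handle_starttag(self, tag: str, attrs: list) -> None:
        if tag != "img":
            return
        attr_map = dict(attrs)
        # alt="" is valid for decorative images, so only a missing attribute counts.
        if "alt" not in attr_map:
            line, _offset = self.getpos()
            self.issues.append((line, attr_map.get("src") or "<no src>"))


def find_missing_alt(html: str) -> list[tuple[int, str]]:
    """Return the line and src of each image that lacks alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.issues


if __name__ == "__main__":
    page = (
        "<p>Quarterly results</p>\n"
        '<img src="chart.png">\n'
        '<img src="logo.png" alt="Company logo">\n'
        '<img src="divider.png" alt="" />'
    )
    for line, src in find_missing_alt(page):
        print(f"line {line}: {src} has no alt attribute")
```

A check like this only confirms that alt text exists, not that it is meaningful, which is why manual verification with a screen reader still matters.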

- AI adjusts text complexity, reading level and presentation format based on individual needs. For dyslexic students, content is reformatted with accessible fonts and spacing. For students with ADHD, long readings are chunked into smaller sections.

- AI generates closed captions for videos, transcripts for audio and detailed image descriptions. Tools like Visme let educators create accessible training presentations with built-in captions and audio versions of the text.

- Speech-to-text like Voiceitt allows students with speech disabilities to participate in discussions. Screen readers like JAWS help blind students access materials independently. AI tutors provide 24/7 support tailored to each student’s understanding level.

- AI enables alternative methods for students to demonstrate knowledge through voice, adaptive interfaces or whatever input method works for them.

Elevate Your Accessible Designs with Visme

AI is improving accessibility from both sides.

For users with disabilities, AI-powered assistive technologies like screen readers and speech recognition are enabling greater independence and participation in education and work.

For educators and content creators, AI accessibility tools make it faster and easier to build inclusive content that reaches every user.

But creating accessible content shouldn't mean juggling multiple tools or adding extra steps to your workflow. You’ll find that the most effective approach is to choose a platform that embeds accessibility into creation from the start.

With AI-powered features like AI text-to-speech and automatic captioning, Visme helps you create inclusive content for students, readers, users or workers with visual and hearing impairments.

Ready to make accessibility natural in your design process? Explore Visme's accessibility features and start creating content that works for everyone.
